GPT-3 shows us how close AI researchers have come to cloning humans, at least in the digital world.

The field of Machine Learning has made an incredible leap forward.

And at the top of the podium is Open AI’s latest language prediction model. With this version, the lab is a lot closer to achieving what it set out to do:

To create language that sounds more human than humans themselves.

Such a breakthrough is no simple feat. Backed by its founders Elon Musk, Sam Altman, and Jeff Weiner, a dream team of innovators, Open AI swung hard to push the boundaries of AI and find a path to safe artificial intelligence.

We assume word of this latest development has reached all ears. So if you are hunting for answers, or wondering what to expect from this so-called beginning of the AI apocalypse, follow the trail!

Can anybody tell me what Open AI’s GPT-3 is?

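GPT-3 is the third of its kind (after GPT-1 and GPT-2, all built on the Transformer architecture introduced by Google researchers). It is a Natural Language Processing (NLP) model released by Open AI, an artificial intelligence research laboratory whose stated mission is to ensure that artificial general intelligence benefits all of humanity. Open AI describes artificial general intelligence as highly autonomous systems that outperform humans at most economically valuable work.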

Now, before we delve into the details, take a look at its textbook definition. We hope it doesn’t scare you.

“It’s a task-agnostic Natural Language Processing that requires minimal fine-tuning, meaning that it’s adaptive enough to perform text-generation, quality queries, and more with minimal user adjustments.”
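In practice, "task-agnostic" with "minimal fine-tuning" usually means you steer the model with the prompt instead of retraining it. You show it a few solved examples and leave the last one open. The paper that introduced GPT-3 ("Language Models are Few-Shot Learners") calls this few-shot learning. Below is a minimal sketch of building such a prompt in Python. The `build_few_shot_prompt` helper and its "Input:/Output:" layout are our own hypothetical choices, and nothing here calls Open AI’s actual API.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot prompt: an instruction, some solved examples,
    then a new input whose answer is left blank for the model to fill in.

    The "Input:/Output:" layout is a hypothetical convention for illustration.
    """
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The final "Output:" is left empty; a model like GPT-3 would be
    # expected to continue the text from this point.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage"), ("sea otter", "loutre de mer")],
    "thank you",
)
print(prompt)
```

You can swap the instruction and examples for sentiment labels or question-and-answer pairs. The same untouched model is then pointed at a different task. No parameters change, only the text it is asked to continue.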

Judging by the frenzy it is causing in the Twittersphere, the tech community can’t get enough of it. At this rate, it is racing to become the biggest thing since bitcoin. Even so, a lot of unnecessary hype can obscure its obvious errors.

It is all too familiar. Remember when the fourth installment of the Transformers movie franchise came out? The people in the movie, apparently tired of Transformers destroying cities in their incessant battles with each other, took to building their own. The answer was an element called transformium. It was the game-changing innovation behind human-made Transformers that served human interests.

Not to cast humans in a bad light, but the world of Machine Learning has, in a similar whirl, released its latest language prediction model, one that is far better than its predecessor.

Curbing human error is one of the reasons it exists. That makes the prospect of human jobs being wiped out all the more plausible.

But it opens up a lot of avenues too, especially for the unskilled. What was science fiction only a few years ago is now something students can use from their dorm rooms.

How good is GPT-3?

To begin with, GPT-3’s language capabilities are breathtaking.

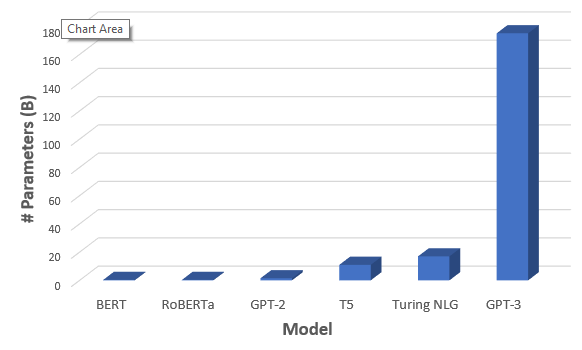

The model has 175 billion parameters compared to GPT-2’s 1.5 billion.
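Here is a back-of-the-envelope estimate of where a figure like 175 billion comes from. It uses a common approximation. Each Transformer layer holds about 12·d² weights, where d is the model width: 4·d² in the attention block and 8·d² in the feed-forward block. The estimate adds the token and position embedding tables and ignores the comparatively tiny bias and layer-norm terms. The layer counts and widths are the hyperparameters published for the largest GPT-2 and GPT-3 models. The function itself is only a rough sketch, not how Open AI counts parameters.

```python
def approx_transformer_params(n_layers, d_model, vocab_size, context_len):
    """Rough parameter count for a GPT-style decoder-only Transformer.

    Approximation: about 12 * d_model**2 weights per layer
    (4 * d**2 for the attention Q, K, V and output projections,
     8 * d**2 for a feed-forward block with a 4 * d hidden size),
    plus token and position embeddings. Biases and layer norms are ignored.
    """
    per_layer = 12 * d_model ** 2
    embeddings = vocab_size * d_model + context_len * d_model
    return n_layers * per_layer + embeddings


# Hyperparameters as reported for the largest GPT-2 and GPT-3 models.
gpt2 = approx_transformer_params(n_layers=48, d_model=1600, vocab_size=50257, context_len=1024)
gpt3 = approx_transformer_params(n_layers=96, d_model=12288, vocab_size=50257, context_len=2048)

print(f"GPT-2: ~{gpt2 / 1e9:.2f} billion parameters")
print(f"GPT-3: ~{gpt3 / 1e9:.1f} billion parameters")
print(f"Ratio: ~{gpt3 / gpt2:.0f}x")
```

The estimate lands at roughly 1.56 billion for GPT-2 and 174.6 billion for GPT-3, close to the published 1.5 and 175 billion. Nearly all of GPT-3’s parameters sit in its 96 stacked layers rather than in the embeddings.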

When prompted by a human, it can compose thoughtful business memos, generate functioning code, write creative fiction, and much more. At its core, it is an extremely sophisticated text predictor. A human gives it a chunk of text as input, and GPT-3 generates its best guess at the next piece of text (a token, roughly a word or part of a word). It then repeats the process: it takes the original input together with the newly generated text as its new input and generates the next piece. This continues until it reaches a length limit.
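This predict, append, and repeat loop is called autoregressive generation. Here is a minimal sketch of that loop. It uses a toy stand-in for GPT-3: a bigram table that maps each word to the word that most often followed it in a tiny training text. GPT-3 instead scores every possible next token using its 175 billion parameters and works on sub-word tokens rather than whole words. The loop wrapped around the model has the same shape, though. All function names here are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Toy stand-in for a language model: for each word, count which
    words followed it in the training text."""
    words = text.split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(model, context):
    """Return the most frequent follower of the last word, or None if unseen.
    Ties go to the follower seen first in the training text."""
    counts = model.get(context[-1])
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(model, prompt, max_words):
    """Autoregressive generation: predict one word, append it to the input,
    and feed the extended text back in until a length limit is reached."""
    tokens = prompt.split()
    while len(tokens) < max_words:
        nxt = predict_next(model, tokens)
        if nxt is None:  # nothing learned for this word; stop early
            break
        tokens.append(nxt)
    return " ".join(tokens)


corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram_model(corpus)
print(generate(model, "the cat", max_words=8))
```

The toy table only ever looks at the last word, so its output quickly falls into a loop: "the cat sat on the cat sat on". GPT-3 can stay on topic for whole paragraphs, and a large part of why is that it conditions each prediction on much more of the preceding text.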

This means that GPT-3 can also produce imitations. Mario Klingemann, an artist who works with Machine Learning, shared a story called “The importance of being on Twitter”, written in the style of Jerome K. Jerome. Klingemann revealed that all he gave the AI was the title, the author’s name, and the first word, ‘It’.

Meanwhile, Sharif Shameem, the founder of debuild.co, used GPT-3 to generate code. All he had to do was enter a description summing up the gist of what he wanted, and the generator handed back the code.

The exploits of GPT-3 don’t end there. There is even an entire article about GPT-3 written by GPT-3!

All of this is possible because GPT-3 was trained on a huge volume of text from the internet, roughly half a trillion tokens (words and word fragments). It also has 175 billion parameters.

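Parameters are the values a model learns during training. Their number is a measure of the model’s size and complexity, and broadly, more parameters have gone hand in hand with more accurate results.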

Image credit: mc.ai

In simple words, it learned from massive datasets gathered from the inner recesses of the internet.

What the buzz won’t let you see

As with any incredibly powerful tool, this model too can be used for evil.

Text generation can be used to spread propaganda and fake news and to power certain types of bots. Its striking resemblance to deepfakes is also tough to shrug off.

Buried in the hype is its susceptibility to basic errors that an average human would never make. Believe it or not, Sam Altman, co-founder of Open AI, took to Twitter to calm people down.

Even with its excellent engineering, it cannot reason abstractly – it lacks true common sense.

In his evaluation of GPT-3, Kevin Lacker conducted a Turing test and was able to stump the AI by asking it questions no human would ask.

All the model had to do was spot the nonsensical question or simply answer “I don’t know”. Its failure to do so shows that artificial intelligence still struggles when it comes to common sense.

The fact is, Open AI’s GPT-3 is only an early glimpse of the future, and it is still prone to failure.

Future

For now, Open AI wants to explore the capabilities of GPT-3 and has offered beta access to developers.

Open AI plans to turn it into a commercial product later this year. Businesses will soon be able to access the AI via the cloud through a paid subscription.

It is, however, safe to assume that GPT-3 has come closer than any model before it to convincingly passing as human. What remains to be seen is which applications researchers will find it useful for.

If you ask us, we are binge-watching all of the Terminator and Blade Runner movies, because the future is turning out exactly as predicted and we want to be prepared.

The famous interview line from Blade Runner was enough to irk the hell out of the replicants.

Open AI’s GPT-3 found it amusing.

After credits

The world of AI is incredibly competitive, and Open AI is not the only heavyweight calling the shots. Microsoft, Nvidia, and Google may be trumped by Open AI at the moment, but only a few months ago they were very much in the driver’s seat.

This means only one thing: AI will venture into new territory as these companies evolve, and Open AI is just one enabler in a long list of Machine Learning companies.

What the human race can achieve with the advent of AI is mind-boggling, and it can only go in one direction from here. To quote Elon Musk on the podcast The Joe Rogan Experience, “AI is not necessarily bad for humans, it may just be out of human control”.

Be it robot armies, colonizing Mars, time travel, or a humanoid better than humans, we’ll have to wait for the next update.